Together with our processing experts, we have developed quality control criteria for the entire survey area after each processing step.

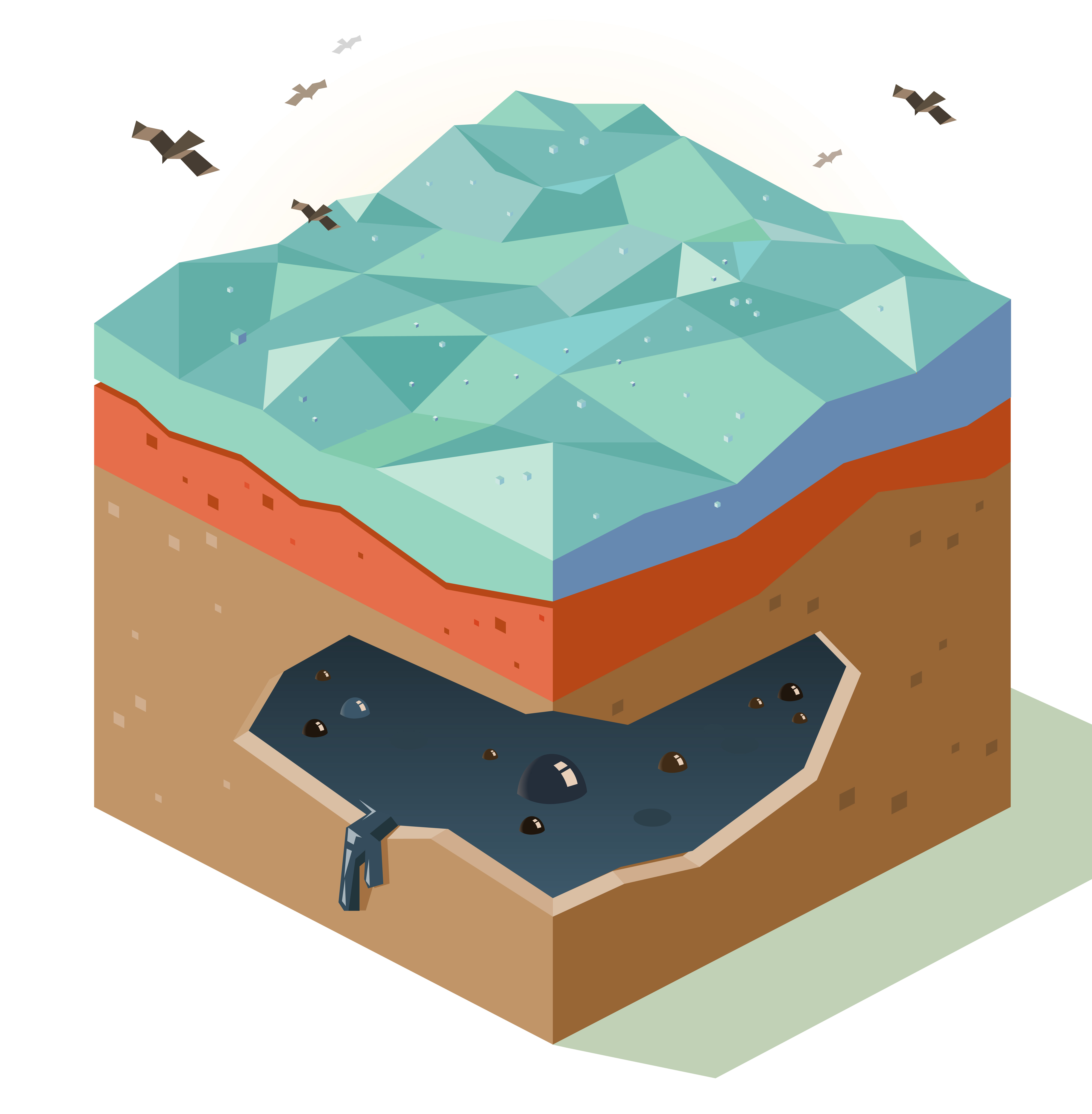

The first step in finding an oil reservoir is building an accurate picture of the subsurface from raw seismic data. We've created a set of deep learning models that cover part of a typical seismic processing pipeline, such as first-break picking and geometry assessment. We've also developed and implemented a number of quantitative metrics that automatically perform quality control of the results after each processing step.
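To illustrate what a quantitative QC metric for one of these steps might look like, here is a minimal sketch for first-break picks in a single shot gather. It is not the metric used in our pipeline. It assumes a simple linear-moveout model (pick time grows linearly with absolute offset, as for a single refracting layer), fits that line by least squares, and reports how many picks deviate from it by more than a threshold. The function name `first_break_qc`, the units (metres and milliseconds), and the 10 ms default threshold are hypothetical choices made for this example.

```python
import numpy as np


def first_break_qc(offsets_m, picks_ms, max_residual_ms=10.0):
    """Hypothetical QC metric for first-break picks in one shot gather.

    Assumes a linear-moveout model t = t0 + |offset| * slowness and
    measures how far each pick lies from the least-squares line.
    """
    # Use absolute offsets so both sides of a split spread share one line.
    offsets = np.abs(np.asarray(offsets_m, dtype=float))
    picks = np.asarray(picks_ms, dtype=float)

    # Degree-1 least-squares fit; polyfit returns [slope, intercept].
    slope, intercept = np.polyfit(offsets, picks, deg=1)
    residuals = picks - (intercept + slope * offsets)
    outliers = np.abs(residuals) > max_residual_ms

    return {
        # slope is in ms per metre, so velocity in m/s is 1000 / slope.
        "apparent_velocity_m_per_s": 1000.0 / slope if slope > 0 else float("inf"),
        "rms_residual_ms": float(np.sqrt(np.mean(residuals ** 2))),
        "outlier_fraction": float(np.mean(outliers)),
    }


if __name__ == "__main__":
    offsets = np.arange(0, 1001, 100)   # 11 receivers, 0 to 1000 m
    picks = 20.0 + 0.5 * offsets        # 2000 m/s refractor, 20 ms intercept
    picks[5] += 50.0                    # one mispicked trace
    print(first_break_qc(offsets, picks))
```

A per-gather summary like this can be computed for every shot in the survey and mapped, so that areas with a high outlier fraction or an implausible apparent velocity stand out for manual review. Real refraction geometry (multiple layers, near-surface velocity changes) would need a more flexible model than a single straight line.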